The Tech Coalition, the group of tech companies developing approaches and policies to combat online child sexual exploitation and abuse (CSEA), today announced the launch of a new program, Lantern, designed to enable social media platforms to share “signals” about activity and accounts that might violate their policies against CSEA.

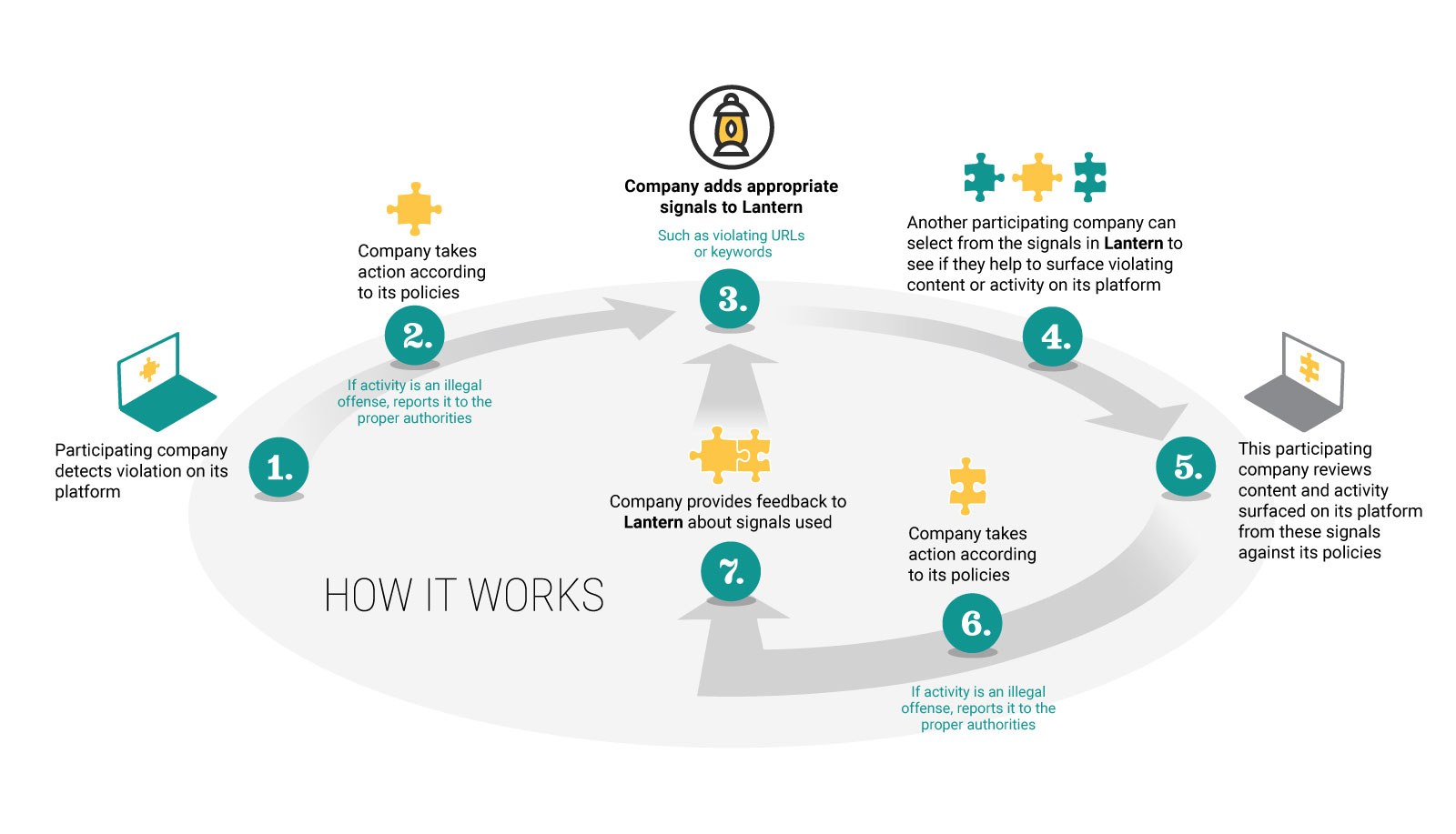

Participating platforms in Lantern, which so far include Discord, Google, Mega, Meta, Quora, Roblox, Snap and Twitch, can upload signals to Lantern about activity that runs afoul of their terms, The Tech Coalition explains. Signals can include information tied to policy-violating accounts, such as email addresses and usernames, or keywords used to groom children as well as to buy and sell child sexual abuse material (CSAM). Other participating platforms can then select from the signals available in Lantern, run the selected signals against their platform, review any activity and content the signals surface and take appropriate action.
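To make that select, run and review flow concrete, here is a minimal sketch in Python. The Tech Coalition has not published Lantern's data format or API, so everything here is a hypothetical illustration: the `Signal` and `Account` classes, the `flag_for_review` function and all sample values are assumptions, not part of Lantern.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """Hypothetical shared signal; Lantern's real schema is not public."""
    kind: str    # e.g. "email", "username" or "keyword"
    value: str
    source: str  # platform that uploaded the signal


@dataclass(frozen=True)
class Account:
    """Hypothetical account record on the receiving platform."""
    account_id: str
    email: str
    username: str


def normalize(text: str) -> str:
    # Compare case-insensitively and ignore surrounding whitespace.
    return text.strip().lower()


def flag_for_review(signals, accounts, selected_kinds):
    """Return (account_id, signal) pairs for human review.

    Only signals whose kind the platform selected are used, and a match
    only queues an account for review; nothing is actioned automatically.
    """
    wanted = {}
    for s in signals:
        if s.kind in selected_kinds:
            wanted.setdefault((s.kind, normalize(s.value)), s)

    hits = []
    for acct in accounts:
        for kind, value in (("email", acct.email), ("username", acct.username)):
            match = wanted.get((kind, normalize(value)))
            if match is not None:
                hits.append((acct.account_id, match))
    return hits


# Placeholder data, invented purely for illustration.
signals = [
    Signal("email", "Flagged@Example.com", "platform_a"),
    Signal("username", "flagged_user", "platform_b"),
    Signal("keyword", "placeholder-term", "platform_a"),
]
accounts = [
    Account("1", "flagged@example.com", "someone"),
    Account("2", "ok@example.com", "flagged_user"),
    Account("3", "fine@example.com", "fine_user"),
]

# This platform chose to run only email signals, so only account "1" is queued.
review_queue = flag_for_review(signals, accounts, {"email"})
```

In this sketch a match is only a prompt for review, which mirrors the description above: each platform decides which signals to run and what action, if any, to take under its own policies.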

The Tech Coalition, which claims that Lantern has been under development for two years with ongoing feedback from outside “experts,” says that during a pilot program, the file hosting service Mega shared URLs that Meta used to remove more than 10,000 Facebook profiles and pages and Instagram accounts.

After the initial group of companies in the “first phase” of Lantern evaluates the program, additional participants will be welcomed to join, The Tech Coalition says.


“Because [child sexual abuse] spans across platforms, in many cases, any one company can only see a fragment of the harm facing a victim. To uncover the full picture and take proper action, companies need to work together,” The Tech Coalition writes in a blog post. “We are committed to including Lantern in the Tech Coalition’s annual transparency report and providing participating companies with recommendations on how to incorporate their participation in the program into their own transparency reporting.”

Despite disagreements on how to tackle CSEA without stifling online privacy, there’s concern about the growing breadth of child abuse material — both real and deepfaked — now circulating online. In 2022, the National Center for Missing and Exploited Children received more than 32 million reports of CSAM.

A recent RAINN and YouGov survey found that 82% of parents believe the tech industry, particularly social media companies, should do more to protect children from sexual abuse and exploitation online. That’s spurred lawmakers into action — albeit with mixed results.

In September, the attorneys general of all 50 U.S. states, plus four territories, signed on to a letter calling for Congress to take action against AI-enabled CSAM. Meanwhile, the European Union has proposed to mandate that tech companies scan for CSAM while identifying and reporting grooming activity targeting kids on their platforms.